Computational and Theoretical Neuroscience of L&M

Our approach to computational and theoretical neuroscience is designed to create a synergistic relationship between our experimental and computational research. We develop computational models in parallel with our experimental work. These models serve two primary functions: to validate our experimental results and to generate novel theoretical hypotheses that can then be tested in new experiments. This tightly integrated, bidirectional approach allows us to tackle fundamental questions about memory across multiple scales of biological organization.

Past examples of validation and interpretation of experimental results

This synergistic approach has already expanded what we can discover through interdisciplinary collaboration. Our computational frameworks have revealed principles underlying experimental observations and provided mechanistic explanations that were not apparent from the data alone. In each of the examples below, modeling turned descriptive experimental findings into predictive theoretical frameworks that guided subsequent experiments.

-

Through biophysical modeling, we uncovered how spatial synaptic organization drives neuronal selectivity (Link).

-

Circuit-level models revealed the computational logic behind memory replay and generalization, leading to experimentally validated predictions about inhibitory plasticity (Link).

-

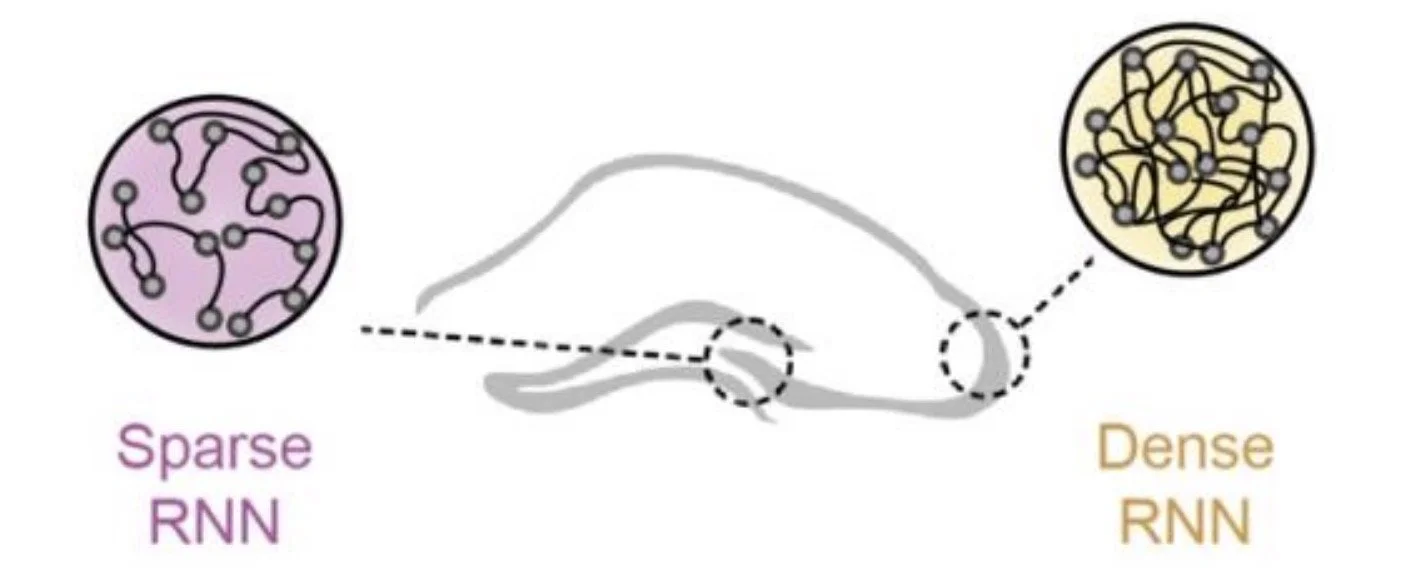

Network modeling demonstrated how connectivity gradients create functionally distinct computational roles within the same brain region (Link)

Development of novel theories and computational inferences

Building on this foundation, our program is also dedicated to developing novel theories that push the boundaries of our understanding of memory. A central theoretical challenge we are currently addressing is the problem of longitudinal memory: how do memories persist and transform over a lifetime? We are investigating this by framing it as a question of how a dynamical system—the brain—can maintain stable information despite the continual turnover and modification of its underlying components (synapses, neurons, and circuits). Understanding this paradox—how a brain that constantly rewrites itself can preserve the structure of past experience—requires a new generation of models that can bridge the gap between microscopic instability and macroscopic behavioral invariance.
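As a purely illustrative sketch of how microscopic instability and macroscopic invariance can coexist in principle, the toy simulation below lets the tuning of individual model neurons drift from day to day while a fixed linear readout of the population stays constant. It is not the group's model. The linear readout, the Gaussian drift, the restriction of drift to directions the readout cannot see, and all names and parameter values (`simulate_null_space_drift`, `drift_scale`, and so on) are assumptions made for this example.

```python
import numpy as np

def simulate_null_space_drift(n_neurons=50, n_days=30, drift_scale=0.3, seed=0):
    """Toy model: per-neuron tuning drifts daily, but only along directions
    that a fixed linear readout cannot see (an assumption of this sketch)."""
    rng = np.random.default_rng(seed)
    readout = rng.normal(size=n_neurons)   # fixed downstream weights (hypothetical)
    tuning = rng.normal(size=n_neurons)    # day-0 response of each neuron to one stimulus

    # Projector onto the subspace orthogonal to the readout vector.
    null_proj = np.eye(n_neurons) - np.outer(readout, readout) / (readout @ readout)

    tunings = [tuning.copy()]
    for _ in range(n_days):
        step = drift_scale * rng.normal(size=n_neurons)
        tuning = tuning + null_proj @ step  # drift restricted to the readout's null space
        tunings.append(tuning.copy())

    tunings = np.array(tunings)             # shape (n_days + 1, n_neurons)
    outputs = tunings @ readout             # readout value on each day
    return tunings, outputs

tunings, outputs = simulate_null_space_drift()

# Microscopic level: how similar is the population code on the last day to day 0?
code_similarity = np.corrcoef(tunings[0], tunings[-1])[0, 1]
# Macroscopic level: how much did the readout move across all days?
readout_change = np.max(np.abs(outputs - outputs[0]))

print(f"population-code correlation, day 0 vs last day: {code_similarity:.2f}")
print(f"largest readout change across days: {readout_change:.2e}")
```

In this sketch the readout is preserved by construction, because every drift step is projected away from the readout direction. Whether and how biological circuits achieve something comparable is exactly the open question described above.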

To this end, our ongoing theoretical projects are focused on three key areas:

- Developing novel methods for modeling longitudinal recordings to better capture the dynamics of memory over extended periods.
- Identifying optimal methods for the targeted perturbation of neuronal subsets to enhance memory formation.
- Investigating the formation of stable-yet-flexible memories in artificial foundation models to derive principles applicable to biological systems.

Internship opportunities

The Program in Memory Longevity's Computational Neuroscience group develops models that bridge the gap between microscopic neural instability and macroscopic behavioral invariance.

Interns work on projects at the intersection of theory and experiment, including: developing methods for modeling longitudinal recordings, implementing neural encoding models and state space analyses, creating data visualization tools for multi-modal calcium imaging data, and building analysis pipelines for large-scale neurophysiological datasets. We seek students with backgrounds in computer science, mathematics, physics, engineering, or computational neuroscience who are excited to tackle fundamental questions about memory through computational approaches.